A company ingests sales transaction data using Amazon Data Firehose into Amazon OpenSearch Service. The Firehose buffer interval is set to 60 seconds.

The company needs sub-second latency for a real-time OpenSearch dashboard.

Which architectural change will meet this requirement?

A construction company is using Amazon SageMaker AI to train specialized custom object detection models to identify road damage. The company uses images from multiple cameras. The images are stored as JPEG objects in an Amazon S3 bucket.

The images need to be pre-processed by using computationally intensive computer vision techniques before the images can be used in the training job. The company needs to optimize data loading and pre-processing in the training job. The solution must not affect model performance or increase compute or storage resources.

Which solution will meet these requirements?

A company is creating an ML model to identify defects in a product. The company has gathered a dataset and has stored the dataset in TIFF format in Amazon S3. The dataset contains 200 images in which the most common defects are visible. The dataset also contains 1,800 images in which there is no defect visible.

An ML engineer trains the model and notices poor performance on some classes. The ML engineer identifies a class imbalance problem in the dataset.

What should the ML engineer do to solve this problem?
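
The size of the imbalance in this scenario can be worked out from the stated counts. The sketch below is a minimal illustration of that arithmetic. The label names `defect` and `no_defect` are assumptions chosen for readability and are not names from the dataset.

```python
# Image counts taken from the scenario: 200 images with visible defects,
# 1,800 images with no visible defect.
# The label names are hypothetical and used only for illustration.
counts = {"defect": 200, "no_defect": 1800}

total = sum(counts.values())

# Share of the dataset that each class represents.
shares = {label: n / total for label, n in counts.items()}

# Ratio of the majority class to the minority class.
imbalance_ratio = max(counts.values()) / min(counts.values())

print(f"total images: {total}")
for label, share in shares.items():
    print(f"{label}: {share:.0%}")
print(f"majority-to-minority ratio: {imbalance_ratio:.0f}:1")
```

Under these counts, the defect class makes up one tenth of the dataset, so the majority class outnumbers it nine to one.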

An ML engineer is setting up a CI/CD pipeline for an ML workflow in Amazon SageMaker AI. The pipeline must automatically retrain, test, and deploy a model whenever new data is uploaded to an Amazon S3 bucket. New data files are approximately 10 GB in size. The ML engineer also needs to track model versions for auditing.

Which solution will meet these requirements?

A financial company receives a high volume of real-time market data streams from an external provider. The streams consist of thousands of JSON records every second.

The company needs to implement a scalable solution on AWS to identify anomalous data points.

Which solution will meet these requirements with the LEAST operational overhead?

A company plans to use Amazon SageMaker AI to build image classification models. The company has 6 TB of training data stored on Amazon FSx for NetApp ONTAP. The file system is in the same VPC as SageMaker AI.

An ML engineer must make the training data accessible to SageMaker AI training jobs.

Which solution will meet these requirements?

An ML engineer wants to run a training job on Amazon SageMaker AI by using multiple GPUs. The training dataset is stored in Apache Parquet format.

The Parquet files are too large to fit into the memory of the SageMaker AI training instances.

Which solution will fix the memory problem?

A company is building an Amazon SageMaker AI pipeline for an ML model. The pipeline uses distributed processing and training.

An ML engineer needs to encrypt network communication between instances that run distributed jobs. The ML engineer configures the distributed jobs to run in a private VPC.

What should the ML engineer do to meet the encryption requirement?

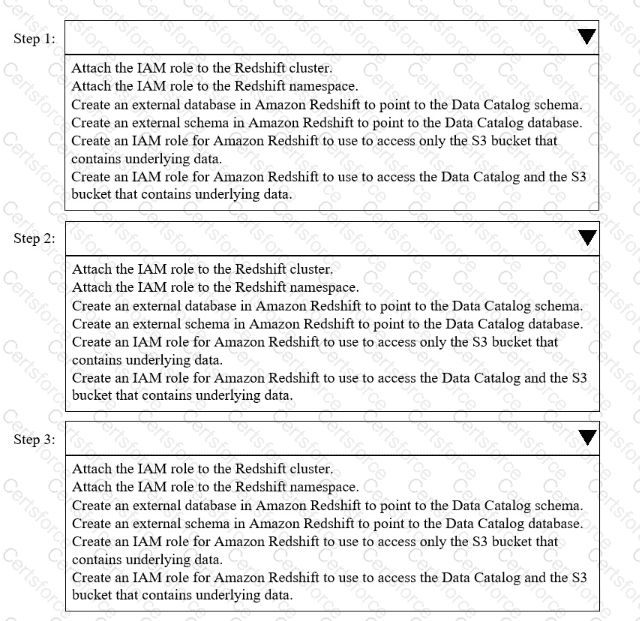

A company needs to combine data from multiple sources. The company must use Amazon Redshift Serverless to query an AWS Glue Data Catalog database and underlying data that is stored in an Amazon S3 bucket.

Select and order the correct steps from the following list to meet these requirements. Select each step one time or not at all. (Select and order three.)

• Attach the IAM role to the Redshift cluster.

• Attach the IAM role to the Redshift namespace.

• Create an external database in Amazon Redshift to point to the Data Catalog schema.

• Create an external schema in Amazon Redshift to point to the Data Catalog database.

• Create an IAM role for Amazon Redshift to use to access only the S3 bucket that contains underlying data.

• Create an IAM role for Amazon Redshift to use to access the Data Catalog and the S3 bucket that contains underlying data.

An ML engineer wants to deploy a workflow that processes streaming IoT sensor data and periodically retrains ML models. The most recent model versions must be deployed to production.

Which service will meet these requirements?