A data scientist is working on a large dataset in Apache Spark using PySpark. The data scientist has a DataFrame `df` with columns `user_id`, `product_id`, and `purchase_amount` and needs to perform some operations on this data efficiently.

Which sequence of operations results in transformations that require a shuffle followed by transformations that do not?

What is the risk associated with this operation when converting a large pandas API on Spark DataFrame back to a pandas DataFrame?

A developer is working with a pandas DataFrame containing user behavior data from a web application.

Which approach should be used for executing a `groupBy` operation in parallel across all workers in Apache Spark 3.5?

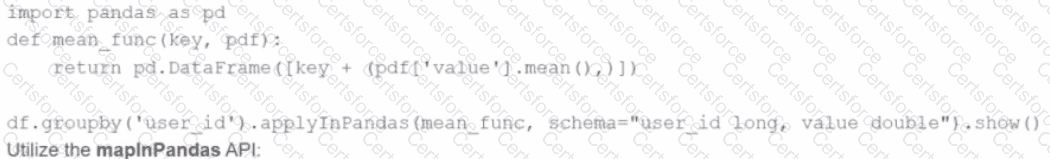

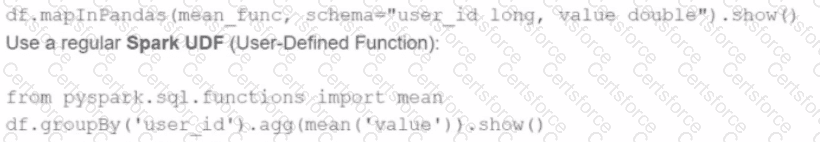

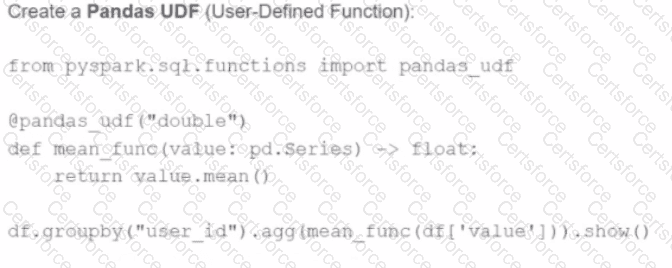

A)

Use the `applyInPandas` API

B)

C)

D)

A Data Analyst needs to retrieve employees with 5 or more years of tenure.

Which code snippet filters and shows the list?

What is the behavior of the function `date_sub(start, days)` if a negative value is passed into the `days` parameter?

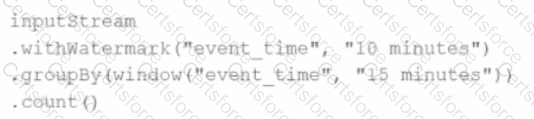
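As a rough illustration of the `date_sub` question above: the PySpark documentation states that when `days` is negative, that many days are added to `start`. The sketch below models that arithmetic in plain Python with `datetime`; it does not call Spark, and the helper names `date_sub_model` and `date_add_model` are hypothetical stand-ins rather than Spark functions.

```python
from datetime import date, timedelta


def date_sub_model(start: date, days: int) -> date:
    """Hypothetical plain-Python stand-in for date_sub: move `days` days back."""
    return start - timedelta(days=days)


def date_add_model(start: date, days: int) -> date:
    """Hypothetical plain-Python stand-in for date_add: move `days` days forward."""
    return start + timedelta(days=days)


start = date(2024, 1, 10)

# A positive value moves the date backwards.
earlier = date_sub_model(start, 3)

# A negative value moves the date forwards, i.e. it behaves like date_add.
later = date_sub_model(start, -3)

print(earlier, later)
```

Under this model, subtracting a negative number of days gives the same result as adding the corresponding positive number, which matches the documented behavior.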

Given this code:

    .withWatermark("event_time", "10 minutes")
    .groupBy(window("event_time", "15 minutes"))
    .count()

What happens to data that arrives after the watermark threshold?

Options:
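To make the watermark question above more concrete, here is a simplified plain-Python model of late-data handling. It assumes the watermark is the maximum event time seen so far minus the 10-minute delay, and that it advances after every event. Spark itself advances the watermark per micro-batch and evaluates lateness against window state, so this is only an approximation. The function name `split_by_watermark` and the sample timestamps are made up for illustration.

```python
from datetime import datetime, timedelta

# Mirrors the 10-minute delay from withWatermark("event_time", "10 minutes").
WATERMARK_DELAY = timedelta(minutes=10)


def split_by_watermark(event_times):
    """Split events into (kept, dropped) using a simplified per-event watermark.

    Assumption: the watermark is recomputed after every event, whereas Spark
    recomputes it between micro-batches.
    """
    max_seen = None
    kept, dropped = [], []
    for ts in event_times:
        watermark = max_seen - WATERMARK_DELAY if max_seen is not None else None
        if watermark is not None and ts < watermark:
            # Older than the watermark: treated as too late and ignored.
            dropped.append(ts)
        else:
            kept.append(ts)
            if max_seen is None or ts > max_seen:
                max_seen = ts
    return kept, dropped


base = datetime(2024, 1, 1, 12, 0)
arrivals = [
    base,                          # 12:00, first event
    base + timedelta(minutes=20),  # 12:20, pushes the watermark to 12:10
    base + timedelta(minutes=15),  # 12:15, late but still within the threshold
    base + timedelta(minutes=5),   # 12:05, older than the watermark
]
kept, dropped = split_by_watermark(arrivals)
print(len(kept), dropped)
```

In this model the 12:05 event is dropped because it is older than the watermark, while the 12:15 event is still counted. In Spark, records later than the threshold are not guaranteed to be processed and are typically dropped from the aggregation.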

An engineer notices a significant increase in the execution time of a Spark job. After some investigation, the engineer decides to check the logs produced by the Executors.

How should the engineer retrieve the Executor logs to diagnose performance issues in the Spark application?

A data engineer uses a broadcast variable to share a DataFrame containing millions of rows across executors for lookup purposes. What will be the outcome?

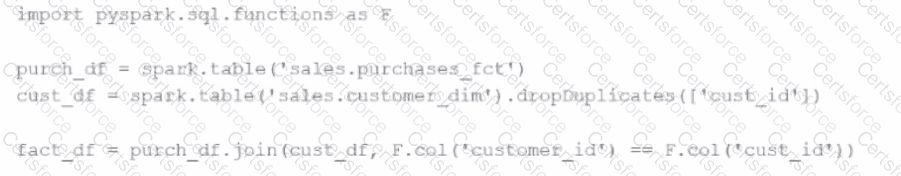

A developer is trying to join two tables, `sales.purchases_fct` and `sales.customer_dim`, using the following code:

fact_df = purch_df.join(cust_df, F.col('customer_id') == F.col('custid'))

The developer has discovered that customers in the `purchases_fct` table that do not exist in the `customer_dim` table are being dropped from the joined table.

Which change should be made to the code to stop these customer records from being dropped?

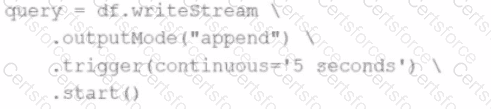

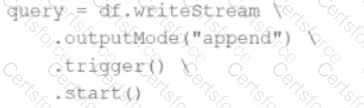

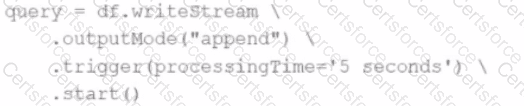

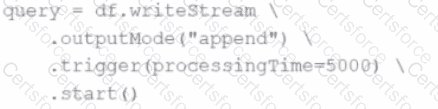
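For the join question above, the rows disappear because `DataFrame.join` defaults to an inner join. Passing `'left'` as the `how` argument keeps every row from the left-hand DataFrame. The sketch below models the difference in plain Python rather than Spark; the `join_rows` helper and the sample rows are hypothetical, and only the column names `customer_id` and `custid` come from the question.

```python
def join_rows(left_rows, right_rows, left_key, right_key, how="inner"):
    """Hypothetical plain-Python model of an inner or left join on dict rows."""
    # Index the right side by its key for quick lookups.
    index = {}
    for row in right_rows:
        index.setdefault(row[right_key], []).append(row)
    right_columns = sorted({col for row in right_rows for col in row})

    joined = []
    for left in left_rows:
        matches = index.get(left[left_key], [])
        if matches:
            for right in matches:
                joined.append({**left, **right})
        elif how == "left":
            # No match: keep the left row and fill right-side columns with None.
            joined.append({**left, **{col: None for col in right_columns}})
    return joined


purchases = [
    {"customer_id": 1, "amount": 10.0},
    {"customer_id": 2, "amount": 5.0},  # no matching customer record
]
customers = [{"custid": 1, "name": "Ada"}]

inner = join_rows(purchases, customers, "customer_id", "custid")
left = join_rows(purchases, customers, "customer_id", "custid", how="left")
print(len(inner), len(left))
```

The inner result loses the purchase for customer 2, while the left result keeps it with empty customer columns. In PySpark the analogous change would be `purch_df.join(cust_df, F.col('customer_id') == F.col('custid'), 'left')`.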

A data engineer is working on a real-time analytics pipeline using Apache Spark Structured Streaming. The engineer wants to process incoming data and ensure that triggers control when the query is executed. The system needs to process data in micro-batches with a fixed interval of 5 seconds.

Which code snippet could the data engineer use to fulfill this requirement?

A)

B)

C)

D)

Options: