Files from multiple data sources arrive in an Amazon S3 bucket on a regular basis. A data engineer wants to ingest new files into Amazon Redshift in near real time when the new files arrive in the S3 bucket.

Which solution will meet these requirements?

A company has multiple applications that use datasets that are stored in an Amazon S3 bucket. The company has an ecommerce application that generates a dataset that contains personally identifiable information (PII). The company has an internal analytics application that does not require access to the PII.

To comply with regulations, the company must not share PII unnecessarily. A data engineer needs to implement a solution that will redact PII dynamically, based on the needs of each application that accesses the dataset.

Which solution will meet the requirements with the LEAST operational overhead?
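To make the requirement in this scenario concrete, here is a minimal sketch of what "redact PII dynamically, based on the needs of each application" could look like as plain logic. The field names (`email`, `phone`), the application identifiers (`ecommerce`, `analytics`), and the `redact_for` helper are all hypothetical and chosen only for illustration. The sketch shows the intended behavior, not any particular AWS service or the expected answer.

```python
# Hypothetical names: these fields and application identifiers are
# assumptions for illustration; they do not come from the scenario.
PII_FIELDS = {"email", "phone"}
APPS_ALLOWED_PII = {"ecommerce"}


def redact_for(app_name, record):
    """Return a copy of the record, masking PII unless the app may see it."""
    if app_name in APPS_ALLOWED_PII:
        return dict(record)
    return {
        key: "[REDACTED]" if key in PII_FIELDS else value
        for key, value in record.items()
    }


record = {"order_id": 17, "email": "user@example.com", "phone": "555-0100"}

# The analytics application receives the same record with PII masked.
analytics_view = redact_for("analytics", record)
# The ecommerce application receives the record unchanged.
ecommerce_view = redact_for("ecommerce", record)
```

The point of the sketch is that a single stored dataset yields a different view for each caller, so no redacted copy of the data has to be maintained separately.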

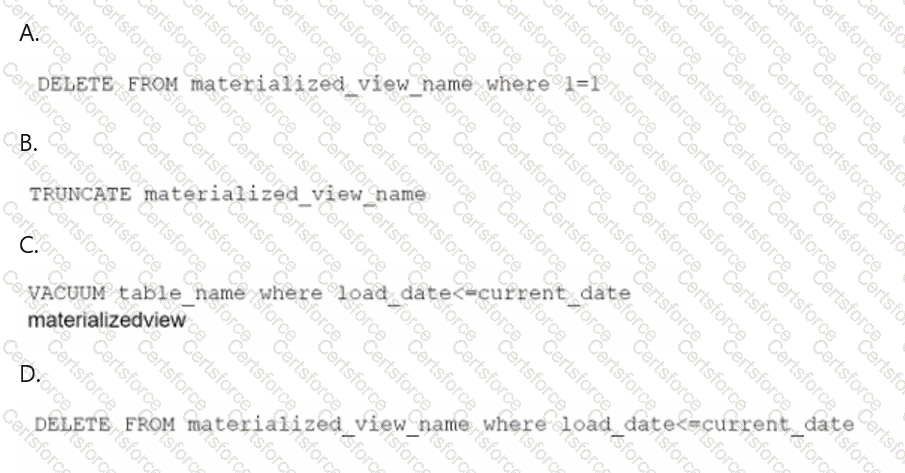

A data engineer maintains a materialized view that is based on an Amazon Redshift database. The view has a column named load_date that stores the date when each row was loaded.

The data engineer needs to reclaim database storage space by deleting all the rows from the materialized view.

Which command will reclaim the MOST database storage space?

A data engineer is using Amazon Athena to analyze sales data that is in Amazon S3. The data engineer writes a query to retrieve sales amounts for 2023 for several products from a table named sales_data. However, the query does not return results for all of the products that are in the sales_data table. The data engineer needs to troubleshoot the query to resolve the issue.

The data engineer's original query is as follows:

SELECT product_name, sum(sales_amount)
FROM sales_data
WHERE year = 2023
GROUP BY product_name

How should the data engineer modify the Athena query to meet these requirements?

A company stores its processed data in an S3 bucket. The company has a strict data access policy. The company uses IAM roles to grant teams within the company different levels of access to the S3 bucket.

The company wants to receive notifications when a user violates the data access policy. Each notification must include the username of the user who violated the policy.

Which solution will meet these requirements?

A company uses a variety of AWS and third-party data stores. The company wants to consolidate all the data into a central data warehouse to perform analytics. Users need fast response times for analytics queries.

The company uses Amazon QuickSight in direct query mode to visualize the data. Users normally run queries during a few hours each day with unpredictable spikes.

Which solution will meet these requirements with the LEAST operational overhead?

A data engineer maintains custom Python scripts that perform a data formatting process that many AWS Lambda functions use. When the data engineer needs to modify the Python scripts, the data engineer must manually update all the Lambda functions.

The data engineer requires a less manual way to update the Lambda functions.

Which solution will meet this requirement?

A company needs to implement a new inventory management system that provides near real-time updates and visibility across all AWS Regions. The new solution must provide centralized access control over data access and permissions. The company has a separate inventory management team assigned to each Region. Each inventory management team needs to update inventory levels.

A data engineer must implement Amazon Redshift data sharing with write capabilities. The solution must follow the principle of least privilege.

Which solution will meet these requirements with the LEAST operational overhead?

A company uses AWS Glue ETL pipelines to process data. The company uses Amazon Athena to analyze data in an Amazon S3 bucket.

To better understand shipping timelines, the company decides to collect and store shipping dates and delivery dates in addition to order data. The company adds a data quality check to ensure that the shipping date is later than the order date and that the delivery date is later than the shipping date. Orders that fail the quality check must be stored in a second Amazon S3 bucket.

Which solution will meet these requirements in the MOST cost-effective way?
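The quality rule described in this scenario (shipping date later than order date, delivery date later than shipping date) can be sketched in a few lines of plain Python. The record layout and the helper names `passes_date_check` and `split_orders` are assumptions made for illustration. The sketch shows only the rule and the pass/fail split, not how a Glue job or any specific AWS feature would implement it.

```python
from datetime import date


def passes_date_check(order):
    """True when order_date < shipping_date < delivery_date (strictly later)."""
    return order["order_date"] < order["shipping_date"] < order["delivery_date"]


def split_orders(orders):
    """Partition orders into (passed, failed) lists according to the date rule."""
    passed, failed = [], []
    for order in orders:
        (passed if passes_date_check(order) else failed).append(order)
    return passed, failed


# Hypothetical sample records; the field names are assumptions.
orders = [
    {"order_id": 1, "order_date": date(2024, 1, 1),
     "shipping_date": date(2024, 1, 2), "delivery_date": date(2024, 1, 5)},
    {"order_id": 2, "order_date": date(2024, 1, 3),
     "shipping_date": date(2024, 1, 2), "delivery_date": date(2024, 1, 6)},
]

passed, failed = split_orders(orders)
```

In the scenario, the `failed` list corresponds to the orders that must be written to the second S3 bucket, while the `passed` list continues through the normal pipeline.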

A data engineer must implement Amazon Redshift Serverless as a data warehouse for a company. The data engineer needs to integrate multiple Amazon Aurora MySQL databases into Amazon Redshift. The solution must maintain near real-time latency and minimize infrastructure management as much as possible.

Which solution will meet these requirements?