An Architect has a table called leader_follower that contains a single column named JSON. The table has one row with the following structure:

{
  "activities": [
    { "activityNumber": 1, "winner": 5 },
    { "activityNumber": 2, "winner": 4 }
  ],
  "follower": {
    "name": { "default": "Matt" },
    "number": 4
  },
  "leader": {
    "name": { "default": "Adam" },
    "number": 5
  }
}

Which query will produce the following results?

| ACTIVITY_NUMBER | WINNER_NAME |
| --- | --- |
| 1 | Adam |
| 2 | Matt |
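The lookup that a correct query has to perform can be sketched outside Snowflake. The Python below is only an illustration of that logic under the row structure given in the question, not one of the answer choices: it expands the activities array into one row per element and resolves each winner number against the leader and follower objects. The function name `winner_names` is hypothetical.

```python
import json

# The single row from leader_follower, as given in the question.
row = json.loads("""
{
  "activities": [
    {"activityNumber": 1, "winner": 5},
    {"activityNumber": 2, "winner": 4}
  ],
  "follower": {"name": {"default": "Matt"}, "number": 4},
  "leader": {"name": {"default": "Adam"}, "number": 5}
}
""")


def winner_names(doc):
    """Return (activity_number, winner_name) pairs for one document."""
    # Build a number -> name lookup from the two participant objects.
    names = {
        doc[role]["number"]: doc[role]["name"]["default"]
        for role in ("leader", "follower")
    }
    # Emit one output row per element of the activities array.
    return [(a["activityNumber"], names[a["winner"]]) for a in doc["activities"]]


for activity_number, winner_name in winner_names(row):
    print(activity_number, winner_name)
```

In SQL terms, the per-element expansion corresponds to flattening the array, and the dictionary lookup corresponds to a conditional match of the winner number against the leader and follower numbers.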

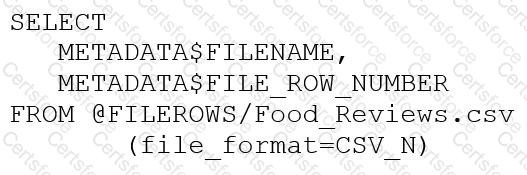

An Architect runs the following SQL query:

How can this query be interpreted?

A Snowflake Architect is designing a multi-tenant application strategy for an organization in the Snowflake Data Cloud and is considering using an Account Per Tenant strategy.

Which requirements will be addressed with this approach? (Choose two.)

Which security, governance, and data protection features require, at a MINIMUM, the Business Critical edition of Snowflake? (Choose two.)

You are a Snowflake architect in an organization. The business team has asked you to deploy a use case that requires loading data which they can visualize through Tableau. Every day new data comes in and the old data is no longer required.

What type of table will you use in this case to optimize cost?

An Architect has a design where files arrive every 10 minutes and are loaded into a primary database table using Snowpipe. A secondary database is refreshed every hour with the latest data from the primary database.

Based on this scenario, what Time Travel query options are available on the secondary database?

Assuming all Snowflake accounts are using Enterprise edition or higher, in which development and testing scenarios would copying of data be required, and zero-copy cloning not be suitable? (Select TWO).

An Architect needs to design a data unloading strategy for Snowflake that will be used with the COPY INTO <location> command.

Which configuration is valid?

When loading data into a table that captures the load time in a column with a default value of either CURRENT_TIME() or CURRENT_TIMESTAMP(), what will occur?

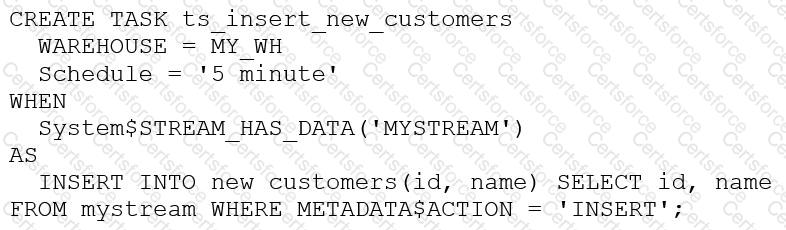

The following DDL command was used to create a task based on a stream:

Assuming MY_WH is set to AUTO_SUSPEND = 60 and used exclusively for this task, which statement is true?