Which of the following SQL keywords can be used to convert a table from a long format to a wide format?
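As background for this question, the sketch below shows what a long-to-wide reshape does to a table. It is an illustration only: it uses Python's built-in sqlite3 module, which has no PIVOT clause, so conditional aggregation stands in for it. The table `sales_long` and its columns are hypothetical and do not come from the question.

```python
import sqlite3

# Hypothetical long-format table: one row per (store, quarter) pair.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_long (store TEXT, quarter TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales_long VALUES (?, ?, ?)",
    [("north", "Q1", 10), ("north", "Q2", 20), ("south", "Q1", 5), ("south", "Q2", 7)],
)

# Wide format: one row per store, one column per quarter.
# SQLite lacks PIVOT, so CASE expressions inside SUM emulate it here.
# In Spark SQL / Databricks SQL the same reshape would typically be written as:
#   SELECT * FROM sales_long PIVOT (SUM(amount) FOR quarter IN ('Q1', 'Q2'))
wide_rows = conn.execute(
    """
    SELECT store,
           SUM(CASE WHEN quarter = 'Q1' THEN amount END) AS Q1,
           SUM(CASE WHEN quarter = 'Q2' THEN amount END) AS Q2
    FROM sales_long
    GROUP BY store
    ORDER BY store
    """
).fetchall()

for row in wide_rows:
    print(row)
```

Each distinct value of `quarter` becomes its own column, which is the defining property of the wide format.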

What Databricks feature can be used to check the data sources and tables used in a workspace?

A data engineer runs a statement every day to copy the previous day’s sales into the table transactions. Each day’s sales are in their own file in the location "/transactions/raw".

Today, the data engineer runs the following command to complete this task:

After running the command today, the data engineer notices that the number of records in table transactions has not changed.

Which of the following describes why the statement might not have copied any new records into the table?

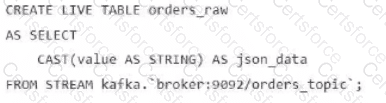

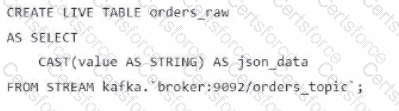

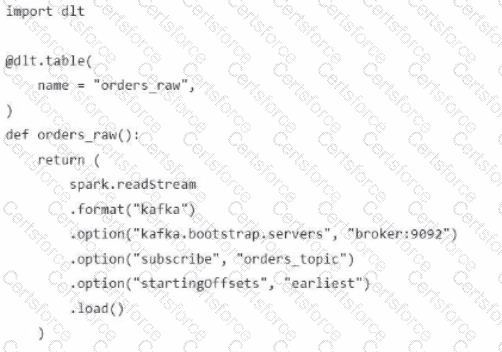

A data engineer streams customer orders into a Kafka topic (orders_topic) and is currently writing the ingestion script of a DLT pipeline. The data engineer needs to ingest the data from Kafka brokers to DLT using Databricks.

What is the correct code for ingesting the data?

A)

B)

C)

D)

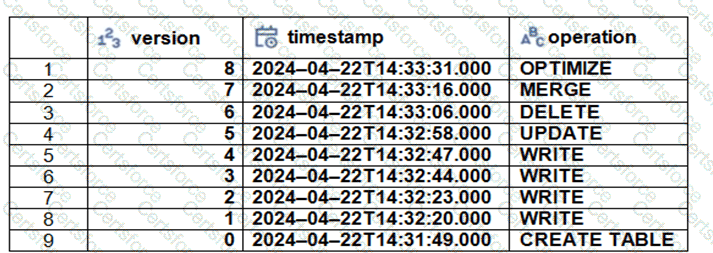

The Delta transaction log for the ‘students’ table is shown using the ‘DESCRIBE HISTORY students’ command. A data engineer needs to query the table as it existed before the UPDATE operation listed in the log.

Which commands should the data engineer use to achieve this? (Choose two.)

In which of the following scenarios should a data engineer use the MERGE INTO command instead of the INSERT INTO command?
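To make the contrast in this question concrete, the sketch below compares a plain append with an upsert. It is a rough analogy, not Delta Lake code: SQLite has no MERGE INTO, so `INSERT ... ON CONFLICT DO UPDATE` stands in for it. The tables `customers` and `customers_append` and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Target that must hold one row per id (upsert target).
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
# Target that accepts anything (append-only, like a plain INSERT INTO).
conn.execute("CREATE TABLE customers_append (id INTEGER, email TEXT)")

# Hypothetical incoming batch: id 1 arrives twice with different emails.
updates = [(1, "a@example.com"), (2, "b@example.com"), (1, "a.new@example.com")]

# Plain insert: every incoming row is appended, so id 1 is duplicated.
conn.executemany("INSERT INTO customers_append VALUES (?, ?)", updates)

# Upsert (requires SQLite 3.24+): insert new keys, update existing ones.
# This plays the role that MERGE INTO ... WHEN MATCHED / WHEN NOT MATCHED
# plays in Databricks SQL.
conn.executemany(
    """
    INSERT INTO customers (id, email) VALUES (?, ?)
    ON CONFLICT(id) DO UPDATE SET email = excluded.email
    """,
    updates,
)

append_count = conn.execute("SELECT COUNT(*) FROM customers_append").fetchone()[0]
merged_rows = conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall()
print(append_count, merged_rows)
```

The append-only table ends up with a duplicate row for the repeated key, while the upsert target keeps a single, updated row per key.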

Which SQL code snippet will correctly demonstrate a Data Definition Language (DDL) operation used to create a table?

A data engineer has joined an existing project and they see the following query in the project repository:

CREATE STREAMING LIVE TABLE loyal_customers AS

SELECT customer_id

FROM STREAM(LIVE.customers)

WHERE loyalty_level = 'high';

Which of the following describes why the STREAM function is included in the query?

A data engineer has three tables in a Delta Live Tables (DLT) pipeline. They have configured the pipeline to drop invalid records at each table. They notice that some data is being dropped due to quality concerns at some point in the DLT pipeline. They would like to determine at which table in their pipeline the data is being dropped.

Which of the following approaches can the data engineer take to identify the table that is dropping the records?

Which of the following describes the relationship between Bronze tables and raw data?