A Spark application suffers from too many small tasks due to excessive partitioning. How can this be fixed without a full shuffle?
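
As a rough illustration of the scenario, the sketch below merges existing partitions with `coalesce()`, which combines partitions without the full shuffle that `repartition()` triggers. The helper names and the 128 MiB target partition size are assumptions made for this example, not part of the question.

```python
def target_partitions(total_bytes: int,
                      bytes_per_partition: int = 128 * 1024 * 1024) -> int:
    """Ceil-divide the dataset size by the desired partition size (minimum 1).

    The 128 MiB default is an assumed target, not a Spark requirement.
    """
    return max(1, -(-total_bytes // bytes_per_partition))


def shrink_partitions(df, total_bytes: int):
    """Reduce the partition count of a Spark DataFrame without a full shuffle."""
    wanted = target_partitions(total_bytes)
    # coalesce() can only lower the partition count, so cap the request
    # at the number of partitions the DataFrame already has.
    return df.coalesce(min(wanted, df.rdd.getNumPartitions()))
```

For a DataFrame of about 1 GiB, `shrink_partitions(df, 1024**3)` would ask for at most 8 partitions under these assumptions.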

A data engineer observes that an upstream streaming source sends duplicate records, where duplicates share the same key and have at most a 30-minute difference in event_timestamp. The engineer adds:

dropDuplicatesWithinWatermark("event_timestamp", "30 minutes")

What is the result?
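
For reference, a minimal sketch of how this deduplication is usually written in PySpark 3.5 or later: the lateness bound is declared with `withWatermark()`, and `dropDuplicatesWithinWatermark()` receives the key columns. The column name `key` and the function name `dedupe_stream` are assumptions for illustration.

```python
def dedupe_stream(events, key_cols=("key",), delay="30 minutes"):
    """Drop duplicate records that arrive within the watermark delay.

    `events` is expected to be a streaming DataFrame with an
    `event_timestamp` column; `key_cols` is a hypothetical key.
    """
    return (
        events
        # Declare how far apart (in event time) duplicates may be.
        .withWatermark("event_timestamp", delay)
        # Deduplicate on the key columns only; state for a key is kept
        # until the watermark passes, then discarded.
        .dropDuplicatesWithinWatermark(list(key_cols))
    )
```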

What is the benefit of using Pandas on Spark for data transformations?

A data analyst builds a Spark application to analyze finance data and performs the following operations: filter, select, groupBy, and coalesce.

Which operation results in a shuffle?
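
One way to check this empirically is to look for an `Exchange` node in the physical plan. The sketch below is a heuristic under the assumption that `DataFrame.explain()` prints the plan to standard output; the helper names are made up for this example.

```python
import io
import re
from contextlib import redirect_stdout


def physical_plan(df) -> str:
    """Capture the text that df.explain() prints."""
    buf = io.StringIO()
    with redirect_stdout(buf):
        df.explain()
    return buf.getvalue()


def has_shuffle(plan: str) -> bool:
    """Heuristic: a shuffle appears as a standalone 'Exchange' node.

    The word boundary keeps 'BroadcastExchange' (a broadcast, not a
    shuffle) from matching.
    """
    return re.search(r"\bExchange\b", plan) is not None
```

With a DataFrame `df` and a hypothetical column `account`, `has_shuffle(physical_plan(df.groupBy("account").count()))` would be expected to return `True`, whereas a chain of only `filter`, `select`, and `coalesce` normally adds no `Exchange` node.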

A data engineer wants to create an external table from a JSON file located at /data/input.json with the following requirements:

Create an external table named users

Automatically infer schema

Merge records with differing schemas

Which code snippet should the engineer use?

18 of 55.

An engineer has two DataFrames — df1 (small) and df2 (large). To optimize the join, the engineer uses a broadcast join:

from pyspark.sql.functions import broadcast

df_result = df2.join(broadcast(df1), on="id", how="inner")

What is the purpose of using broadcast() in this scenario?

A Spark engineer must select an appropriate deployment mode for the Spark jobs.

What is the benefit of using cluster mode in Apache Spark™?

17 of 55.

A data engineer has noticed that upgrading the Spark version in their applications from Spark 3.0 to Spark 3.5 has improved the runtime of some scheduled Spark applications.

Looking further, the data engineer realizes that Adaptive Query Execution (AQE) is now enabled.

Which operation is AQE performing to automatically improve the Spark application's performance?
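
For context, the settings below are the standard configuration keys that govern AQE and two of its runtime optimizations (coalescing shuffle partitions and splitting skewed join partitions). This is a sketch of how they could be set explicitly; the constant and function names are assumptions, and recent Spark releases already enable AQE by default.

```python
# Real Spark SQL configuration keys; values are strings as Spark expects.
AQE_SETTINGS = {
    "spark.sql.adaptive.enabled": "true",
    # Merge small shuffle partitions after a stage finishes.
    "spark.sql.adaptive.coalescePartitions.enabled": "true",
    # Split oversized partitions in sort-merge joins.
    "spark.sql.adaptive.skewJoin.enabled": "true",
}


def apply_aqe(spark, settings=AQE_SETTINGS):
    """Apply the AQE settings to an existing SparkSession and return it."""
    for key, value in settings.items():
        spark.conf.set(key, value)
    return spark
```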

1 of 55.

A data scientist wants to ingest a directory full of plain text files so that each record in the output DataFrame contains the entire contents of a single file and the full path of the file the text was read from.

The first attempt does read the text files, but each record contains a single line. This code is shown below:

from pyspark.sql.functions import input_file_name

txt_path = "/datasets/raw_txt/*"

df = spark.read.text(txt_path) # one row per line by default

df = df.withColumn("file_path", input_file_name()) # add full path

Which code change produces a DataFrame that meets the data scientist's requirements?
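
A minimal sketch of one way to get one row per file, assuming the text reader's `wholetext` option: the file path is added through the SQL function `input_file_name()` inside `selectExpr`. The function name `read_whole_files` is hypothetical.

```python
def read_whole_files(spark, path):
    """Read each text file under `path` as a single row with its full path."""
    return (
        spark.read
        # wholetext=True turns each file into one row instead of one row per line.
        .option("wholetext", True)
        .text(path)
        # `value` holds the file contents; input_file_name() gives its path.
        .selectExpr("value", "input_file_name() AS file_path")
    )
```

Called as `read_whole_files(spark, "/datasets/raw_txt/*")`, this would yield the columns `value` and `file_path`.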

A data engineer is working on a real-time analytics pipeline using Apache Spark Structured Streaming. The engineer wants to process incoming data and ensure that triggers control when the query is executed. The system needs to process data in micro-batches with a fixed interval of 5 seconds.

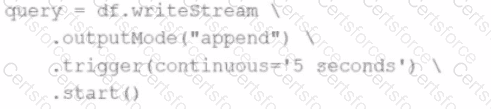

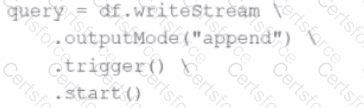

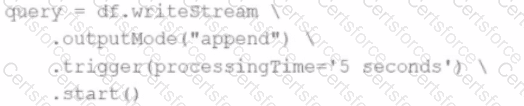

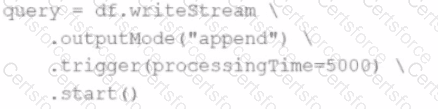

Which code snippet could the data engineer use to fulfill this requirement?

A)

B)

C)

D)
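
Since the option snippets are only available as images, here is a hedged sketch of a fixed-interval micro-batch trigger in Structured Streaming. The console sink, the append output mode, and the function name are assumptions chosen for illustration; only the 5-second interval comes from the question.

```python
def start_fixed_interval_query(stream_df, interval="5 seconds"):
    """Start a streaming query that runs one micro-batch per interval."""
    return (
        stream_df.writeStream
        .format("console")        # assumed sink, for demonstration only
        .outputMode("append")     # assumed output mode
        # processingTime schedules a micro-batch at a fixed interval.
        .trigger(processingTime=interval)
        .start()
    )
```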
