49 of 55.

In the code block below, aggDF contains aggregations on a streaming DataFrame:

    aggDF.writeStream \
        .format("console") \
        .outputMode("???") \
        .start()

Which output mode at line 3 ensures that the entire result table is written to the console during each trigger execution?
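For reference, a minimal sketch of how the writer chain could be wrapped, assuming `agg_df` is a streaming DataFrame that contains aggregations. In Structured Streaming, the `complete` output mode writes the whole updated result table to the sink on every trigger. The helper name `start_console_query` is hypothetical and is not part of the question.

```python
def start_console_query(agg_df, output_mode="complete"):
    """Start a console sink for an aggregated streaming DataFrame.

    `agg_df` is assumed to expose the usual `writeStream` attribute.
    With output_mode="complete", the whole result table is emitted
    on each trigger.
    """
    return (
        agg_df.writeStream
        .format("console")        # print each micro-batch to stdout
        .outputMode(output_mode)  # "complete" rewrites the full table
        .start()
    )
```

With a real streaming DataFrame, the returned object is the running query handle, so a caller would typically follow up with `awaitTermination()`.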

What is a feature of Spark Connect?

43 of 55.

An organization has been running a Spark application in production and is considering disabling the Spark History Server to reduce resource usage.

What will be the impact of disabling the Spark History Server in production?

How can a Spark developer ensure optimal resource utilization when running Spark jobs in Local Mode for testing?

39 of 55.

A Spark developer is developing a Spark application to monitor task performance across a cluster.

One requirement is to track the maximum processing time for tasks on each worker node and consolidate this information on the driver for further analysis.

Which technique should the developer use?

34 of 55.

A data engineer is investigating a Spark cluster that is experiencing underutilization during scheduled batch jobs.

After checking the Spark logs, they noticed that tasks are often getting killed due to timeout errors, and there are several warnings about insufficient resources in the logs.

Which action should the engineer take to resolve the underutilization issue?

A data scientist is analyzing a large dataset and has written a PySpark script that includes several transformations and actions on a DataFrame. The script ends with a collect() action to retrieve the results.

How does Apache Spark™'s execution hierarchy process the operations when the data scientist runs this script?

10 of 55.

What is the benefit of using Pandas API on Spark for data transformations?

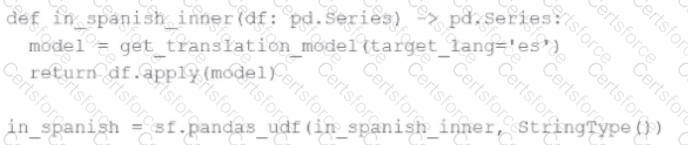

An MLOps engineer is building a Pandas UDF that applies a language model that translates English strings into Spanish. The initial code is loading the model on every call to the UDF, which is hurting the performance of the data pipeline.

The initial code is:

    def in_spanish_inner(df: pd.Series) -> pd.Series:
        model = get_translation_model(target_lang='es')
        return df.apply(model)

    in_spanish = sf.pandas_udf(in_spanish_inner, StringType())

How can the MLOps engineer change this code to reduce how many times the language model is loaded?

What is the benefit of Adaptive Query Execution (AQE)?
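As background, AQE re-optimizes a query plan at runtime using statistics gathered at shuffle boundaries, for example by coalescing small shuffle partitions or splitting skewed join partitions. The sketch below collects a few of the related Spark SQL configuration keys. The values are illustrative, and the helper `apply_settings` is a hypothetical name that assumes a builder object with a chainable `config(key, value)` method, such as `SparkSession.builder`.

```python
# AQE-related Spark SQL configuration keys; the values are illustrative.
AQE_SETTINGS = {
    "spark.sql.adaptive.enabled": "true",
    "spark.sql.adaptive.coalescePartitions.enabled": "true",
    "spark.sql.adaptive.skewJoin.enabled": "true",
}


def apply_settings(builder, settings=AQE_SETTINGS):
    """Apply each key/value pair through the builder's config() method."""
    for key, value in settings.items():
        builder = builder.config(key, value)
    return builder
```

With PySpark installed, a call such as `apply_settings(SparkSession.builder).getOrCreate()` would be one way to use it.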