What is accomplished by producing data to a topic with a message key?
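As background for this question, the sketch below illustrates the general idea of key-based partitioning: the key bytes are hashed and the hash is reduced modulo the partition count, so records with the same key land on the same partition. This is a simplified stand-in. Kafka's default partitioner uses a murmur2 hash of the serialized key, whereas `Arrays.hashCode` is used here only to keep the example dependency-free. The class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class KeyPartitionSketch {
    // Maps a key to a partition index in [0, numPartitions).
    // Arrays.hashCode is a stand-in for Kafka's murmur2 hash (assumption for illustration).
    public static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return Math.floorMod(Arrays.hashCode(keyBytes), numPartitions);
    }

    public static void main(String[] args) {
        // The same key always yields the same partition for a fixed partition count.
        System.out.println(partitionFor("customer-42", 6) == partitionFor("customer-42", 6));
    }
}
```

Because the mapping depends on the partition count, changing the number of partitions can move a key to a different partition.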

You want to connect with username and password to a secured Kafka cluster that has SSL encryption.

Which properties must your client include?
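For orientation, here is a minimal sketch of client settings for username/password authentication over an encrypted connection. The property names are standard Kafka client configuration keys, but the `PLAIN` mechanism, the broker address, and the class name are assumptions made for illustration. A cluster that uses SCRAM or a private certificate authority would need different or additional values.

```java
import java.util.Properties;

public class SecureClientConfig {
    // Builds client properties for SASL authentication over TLS.
    // The broker address and mechanism are hypothetical placeholders.
    public static Properties build(String username, String password) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "broker.example.com:9093"); // assumed address
        props.put("security.protocol", "SASL_SSL");                // SASL auth + TLS encryption
        props.put("sasl.mechanism", "PLAIN");                      // assumption: PLAIN mechanism
        props.put("sasl.jaas.config",
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
                + "username=\"" + username + "\" password=\"" + password + "\";");
        return props;
    }
}
```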

You are implementing a Kafka Streams application to process financial transactions.

Each transaction must be processed exactly once to ensure accuracy.

The application reads from an input topic, performs computations, and writes results to an output topic.

During testing, you notice duplicate entries in the output topic, which violates the exactly-once processing requirement.

You need to ensure exactly-once semantics (EOS) for this Kafka Streams application.

Which step should you take?
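As a point of reference, Kafka Streams exposes its processing guarantee through a single configuration key. The sketch below shows how that key might be set. The application ID, the broker address, and the class name are hypothetical. The value `exactly_once_v2` applies to Kafka Streams 3.0 and later, and older releases use `exactly_once`.

```java
import java.util.Properties;

public class EosStreamsConfig {
    // Builds Kafka Streams properties with exactly-once processing enabled.
    public static Properties build() {
        Properties props = new Properties();
        props.put("application.id", "transaction-processor"); // hypothetical application ID
        props.put("bootstrap.servers", "localhost:9092");     // assumed broker address
        // Kafka Streams 3.0+; earlier versions use "exactly_once" instead.
        props.put("processing.guarantee", "exactly_once_v2");
        return props;
    }
}
```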

You want to enrich the content of a topic by joining it with key records from a second topic.

The two topics have a different number of partitions.

Which two solutions can you use?

Select two.

You are developing a Java application that includes a Kafka consumer.

You need to integrate Kafka client logs with your own application logs.

Your application is using the Log4j2 logging framework.

Which Java library dependency must you include in your project?

You are creating a Kafka Streams application to process retail data.

Match the input data streams with the appropriate Kafka Streams object.

You are writing to a Kafka topic with producer configuration acks=all.

The producer receives acknowledgements from the broker but still creates duplicate messages due to network timeouts and retries.

You need to ensure that duplicate messages are not created.

Which producer configuration should you set?
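To illustrate the setting most relevant to retry-induced duplicates, the sketch below builds producer properties with idempotence enabled. The broker address and class name are hypothetical. Idempotence requires `acks=all`, a non-zero retry count, and at most five in-flight requests per connection, so the example keeps `acks=all` explicit.

```java
import java.util.Properties;

public class IdempotentProducerConfig {
    // Builds producer properties so that retried sends do not create duplicates.
    public static Properties build() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092"); // assumed broker address
        props.put("acks", "all");                         // required for idempotence
        props.put("enable.idempotence", "true");          // broker de-duplicates retried batches
        return props;
    }
}
```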

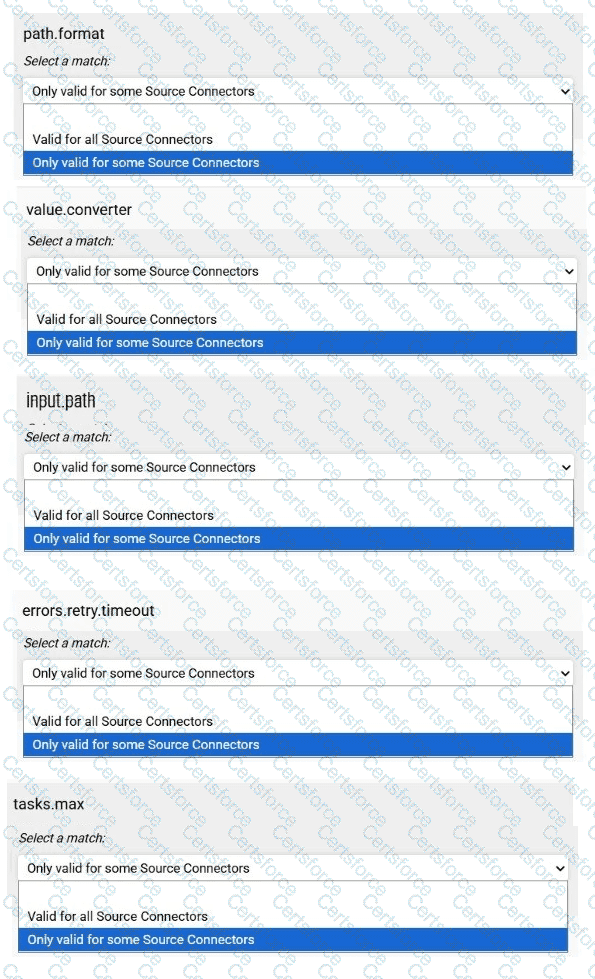

Match each configuration parameter with the correct option.

To answer, choose a match for each option from the drop-down. Partial credit is given for each correct answer.

A stream processing application is consuming from a topic with five partitions. You run three instances of the application. Each instance has num.stream.threads=5.

You need to identify the number of stream tasks that will be created and how many will actively consume messages from the input topic.
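The arithmetic behind this scenario can be sketched as follows, assuming a topology with a single sub-topology that reads only this one input topic (an assumption, since the question does not describe the topology). Under that assumption, Kafka Streams creates one task per input partition, and each task is assigned to exactly one stream thread. The class and method names are hypothetical.

```java
public class StreamTaskCount {
    // One task per input partition, assuming a single sub-topology and one input topic.
    public static int taskCount(int partitions) {
        return partitions;
    }

    // Total stream threads across all running instances.
    public static int totalThreads(int instances, int threadsPerInstance) {
        return instances * threadsPerInstance;
    }

    // Threads left without a task, since each task runs on exactly one thread.
    public static int idleThreads(int partitions, int instances, int threadsPerInstance) {
        return Math.max(0, totalThreads(instances, threadsPerInstance) - taskCount(partitions));
    }

    public static void main(String[] args) {
        // Scenario from the question: 5 partitions, 3 instances, 5 threads each.
        System.out.println("tasks=" + taskCount(5));
        System.out.println("threads=" + totalThreads(3, 5));
        System.out.println("idle=" + idleThreads(5, 3, 5));
    }
}
```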

You have a Kafka client application that has real-time processing requirements.

Which Kafka metric should you monitor?