On the latest Health Check report from your Cloud TEST environment utilizing a MongoDB add-on, you note the following findings:

Category: User Experience, Description: # of slow query rules, Risk: High

Category: User Experience, Description: # of slow write to data store nodes, Risk: High

Which three things might you do to address this, without consulting the business?

Reduce the batch size for database queues to 10.

Optimize the database execution using standard database performance troubleshooting methods and tools (such as query execution plans).

Reduce the size and complexity of the inputs. If you are passing in a list, consider whether the data model can be redesigned to pass single values instead.

Optimize the database execution. Replace the view with a materialized view.

Use smaller CDTs or limit the fields selected in a!queryEntity().

Comprehensive and Detailed In-Depth Explanation:

The Health Check report indicates high-risk issues with slow query rules and slow writes to data store nodes in a MongoDB-integrated Appian Cloud TEST environment. As a Lead Developer, you can address these performance bottlenecks without business consultation by focusing on technical optimizations within Appian and MongoDB. The goal is to improve user experience by reducing query and write latency.

Option B (Optimize the database execution using standard database performance troubleshooting methods and tools (such as query execution plans)):This is a critical step. Slow queries and writes suggest inefficient database operations. Using MongoDB’s explain() or equivalent tools to analyze execution plans can identify missing indices, suboptimal queries, or full collection scans. Appian’s Performance Tuning Guide recommends optimizing database interactions by adding indices on frequently queried fields or rewriting queries (e.g., using projections to limit returned data). This directly addresses both slow queries and writes without business input.

Option C (Reduce the size and complexity of the inputs. If you are passing in a list, consider whether the data model can be redesigned to pass single values instead):Large or complex inputs (e.g., large arrays in a!queryEntity() or write operations) can overwhelm MongoDB, especially in Appian’s data store integration. Redesigning the data model to handle single values or smaller batches reduces processing overhead. Appian’s Best Practices for Data Store Design suggest normalizing data or breaking down lists into manageable units, which can mitigate slow writes and improve query performance without requiring business approval.

Option E (Use smaller CDTs or limit the fields selected in a!queryEntity()): Appian Custom Data Types (CDTs) and a!queryEntity() calls that return excessive fields can increase data transfer and processing time, contributing to slow queries. Limiting fields to only those needed (e.g., by listing the required columns in the query's selection with a!querySelection() instead of returning every field) or using smaller CDTs reduces the load on MongoDB and Appian’s engine. This optimization is a technical adjustment within the developer’s control, aligning with Appian’s Query Optimization Guidelines.

Option A (Reduce the batch size for database queues to 10):While adjusting batch sizes can help with write performance, reducing it to 10 without analysis might not address the root cause and could slow down legitimate operations. This requires testing and potentially business input on acceptable performance trade-offs, making it less immediate.

Option D (Optimize the database execution. Replace the view with a materialized view): MongoDB has no materialized views in the relational sense (unlike databases such as PostgreSQL); its closest equivalent, the on-demand materialized view, is built by writing the output of an aggregation pipeline to a collection, and Appian’s MongoDB add-on relies on collection-based storage. Implementing this would require significant redesign or custom aggregation pipelines, which may exceed the scope of a unilateral technical fix and could impact business logic.

These three actions (B, C, E) leverage Appian and MongoDB optimization techniques, addressing both query and write performance without altering business requirements or processes.
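To make the effect of limiting selected fields concrete, here is a minimal Python sketch. It is not Appian or MongoDB code: the record shape, the field names, and the `project` helper are all hypothetical, and the sketch only compares the serialized size of full records against records reduced to the fields a grid actually needs.

```python
import json

# Hypothetical case records; every field name here is illustrative only.
records = [
    {
        "id": i,
        "status": "Open",
        "priority": "High",
        "description": "x" * 2000,                       # large free-text field
        "auditTrail": ["created", "assigned", "updated"] * 50,
    }
    for i in range(100)
]


def project(rows, fields):
    """Keep only the named fields from each row, like a column selection."""
    return [{f: row[f] for f in fields} for row in rows]


full_size = len(json.dumps(records))
slim = project(records, ["id", "status", "priority"])
slim_size = len(json.dumps(slim))

print(f"full payload: {full_size} characters")
print(f"projected payload: {slim_size} characters")
```

The same idea applies to a query selection: fields that are never displayed or filtered on do not need to travel from the data store to the interface.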

As part of an upcoming release of an application, a new nullable field is added to a table that contains customer data. The new field is used by a report in the upcoming release and is calculated using data from another table.

Which two actions should you consider when creating the script to add the new field?

Create a script that adds the field and leaves it null.

Create a rollback script that removes the field.

Create a script that adds the field and then populates it.

Create a rollback script that clears the data from the field.

Add a view that joins the customer data to the data used in calculation.

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, adding a new nullable field to a database table for an upcoming release requires careful planning to ensure data integrity, report functionality, and rollback capability. The field is used in a report and calculated from another table, so the script must handle both deployment and potential reversibility. Let’s evaluate each option:

A. Create a script that adds the field and leaves it null:Adding a nullable field and leaving it null is technically feasible (e.g., using ALTER TABLE ADD COLUMN in SQL), but it doesn’t address the report’s need for calculated data. Since the field is used in a report and calculated from another table, leaving it null risks incomplete or incorrect reporting until populated, delaying functionality. Appian’s data management best practices recommend populating data during deployment for immediate usability, making this insufficient as a standalone action.

B. Create a rollback script that removes the field:This is a critical action. In Appian, database changes (e.g., adding a field) must be reversible in case of deployment failure or rollback needs (e.g., during testing or PROD issues). A rollback script that removes the field (e.g., ALTER TABLE DROP COLUMN) ensures the database can return to its original state, minimizing risk. Appian’s deployment guidelines emphasize rollback scripts for schema changes, making this essential for safe releases.

C. Create a script that adds the field and then populates it:This is also essential. Since the field is nullable, calculated from another table, and used in a report, populating it during deployment ensures immediate functionality. The script can use SQL (e.g., UPDATE table SET new_field = (SELECT calculated_value FROM other_table WHERE condition)) to populate data, aligning with Appian’s data fabric principles for maintaining data consistency. Appian’s documentation recommends populating new fields during deployment for reporting accuracy, making this a key action.

D. Create a rollback script that clears the data from the field:Clearing data (e.g., UPDATE table SET new_field = NULL) is less effective than removing the field entirely. If the deployment fails, the field’s existence with null values could confuse reports or processes, requiring additional cleanup. Appian’s rollback strategies favor reverting schema changes completely (removing the field) rather than leaving it with nulls, making this less reliable and unnecessary compared to B.

E. Add a view that joins the customer data to the data used in calculation:Creating a view (e.g., CREATE VIEW customer_report AS SELECT ... FROM customer_table JOIN other_table ON ...) is useful for reporting but isn’t a prerequisite for adding the field. The scenario focuses on the field addition and population, not reporting structure. While a view could optimize queries, it’s a secondary step, not a primary action for the script itself. Appian’s data modeling best practices suggest views as post-deployment optimizations, not script requirements.

Conclusion: The two actions to consider are B (create a rollback script that removes the field) and C (create a script that adds the field and then populates it). These ensure the field is added with data for immediate report usability and provide a safe rollback option, aligning with Appian’s deployment and data management standards for schema changes.
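The pairing of a forward script (add and populate) with a rollback script (remove the field) can be sketched as follows. This is an illustration under stated assumptions, not the exam's reference script: it uses Python's built-in sqlite3 module as a stand-in for the real business database, and the table names, the `order_total` column, and the SUM-based calculation are hypothetical. SQLite only supports DROP COLUMN from version 3.35, so the rollback is guarded by a version check.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE customer_order (order_id INTEGER PRIMARY KEY,
                                 customer_id INTEGER, amount REAL);
    INSERT INTO customer VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO customer_order VALUES (1, 1, 100.0), (2, 1, 50.0), (3, 2, 75.0);
""")


def deploy(conn):
    """Forward script: add the nullable column, then populate it."""
    conn.execute("ALTER TABLE customer ADD COLUMN order_total REAL")
    conn.execute("""
        UPDATE customer
        SET order_total = (SELECT SUM(o.amount)
                           FROM customer_order o
                           WHERE o.customer_id = customer.customer_id)
    """)
    conn.commit()


def rollback(conn):
    """Rollback script: remove the column entirely (SQLite 3.35 or later)."""
    conn.execute("ALTER TABLE customer DROP COLUMN order_total")
    conn.commit()


def column_names(conn, table):
    """List the column names of a table via PRAGMA table_info."""
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


deploy(conn)
totals = dict(conn.execute("SELECT name, order_total FROM customer"))
print(totals)

# Only run the rollback where the bundled SQLite supports DROP COLUMN.
DROP_SUPPORTED = sqlite3.sqlite_version_info >= (3, 35, 0)
if DROP_SUPPORTED:
    rollback(conn)
print(column_names(conn, "customer"))
```

Dropping the column in the rollback, rather than setting it to NULL, returns the schema to its pre-release state, which is the reasoning behind preferring option B over option D.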

While working on an application, you have identified oddities and breaks in some of your components. How can you guarantee that this mistake does not happen again in the future?

Design and communicate a best practice that dictates designers only work within the confines of their own application.

Ensure that the application administrator group only has designers from that application’s team.

Create a best practice that enforces a peer review of the deletion of any components within the application.

Provide Appian developers with the “Designer” permissions role within Appian. Ensure that they have only basic user rights and assign them the permissions to administer their application.

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, preventing recurring “oddities and breaks” in application components requires addressing root causes—likely tied to human error, lack of oversight, or uncontrolled changes—while leveraging Appian’s governance and collaboration features. The question implies a past mistake (e.g., accidental deletions or modifications) and seeks a proactive, sustainable solution. Let’s evaluate each option based on Appian’s official documentation and best practices:

A. Design and communicate a best practice that dictates designers only work within the confines of their own application:This suggests restricting designers to their assigned applications via a policy. While Appian supports application-level security (e.g., Designer role scoped to specific applications), this approach relies on voluntary compliance rather than enforcement. It doesn’t directly address “oddities and breaks”—e.g., a designer could still mistakenly alter components within their own application. Appian’s documentation emphasizes technical controls and process rigor over broad guidelines, making this insufficient as a guarantee.

B. Ensure that the application administrator group only has designers from that application’s team:This involves configuring security so only team-specific designers have Administrator rights to the application (via Appian’s Security settings). While this limits external interference, it doesn’t prevent internal mistakes (e.g., a team designer deleting a critical component). Appian’s security model already restricts access by default, and the issue isn’t about unauthorized access but rather component integrity. This step is a hygiene factor, not a direct solution to the problem, and fails to “guarantee” prevention.

C. Create a best practice that enforces a peer review of the deletion of any components within the application:This is the best choice. A peer review process for deletions (e.g., process models, interfaces, or records) introduces a checkpoint to catch errors before they impact the application. In Appian, deletions are permanent and can cascade (e.g., breaking dependencies), aligning with the “oddities and breaks” described. While Appian doesn’t natively enforce peer reviews, this can be implemented via team workflows—e.g., using Appian’s collaboration tools (like Comments or Tasks) or integrating with version control practices during deployment. Appian Lead Developer training emphasizes change management and peer validation to maintain application stability, making this a robust, preventive measure that directly addresses the root cause.

D. Provide Appian developers with the “Designer” permissions role within Appian. Ensure that they have only basic user rights and assign them the permissions to administer their application:This option is confusingly worded but seems to suggest granting Designer system role permissions (a high-level privilege) while limiting developers to Viewer rights system-wide, with Administrator rights only for their application. In Appian, the “Designer” system role grants broad platform access (e.g., creating applications), which contradicts “basic user rights” (Viewer role). Regardless, adjusting permissions doesn’t prevent mistakes—it only controls who can make them. The issue isn’t about access but about error prevention, so this option misses the mark and is impractical due to its contradictory setup.

Conclusion: Creating a best practice that enforces a peer review of the deletion of any components (C) is the strongest solution. It directly mitigates the risk of “oddities and breaks” by adding oversight to destructive actions, leveraging team collaboration, and aligning with Appian’s recommended governance practices. Implementation could involve documenting the process, training the team, and using Appian’s monitoring tools (e.g., Application Properties history) to track changes—ensuring mistakes are caught before deployment. This provides the closest guarantee to preventing recurrence.

You are running an inspection as part of the first deployment process from TEST to PROD. You receive a notice that one of your objects will not deploy because it is dependent on an object from an application owned by a separate team.

What should be your next step?

Create your own object with the same code base, replace the dependent object in the application, and deploy to PROD.

Halt the production deployment and contact the other team for guidance on promoting the object to PROD.

Check the dependencies of the necessary object. Deploy to PROD if there are few dependencies and it is low risk.

Push a functionally viable package to PROD without the dependencies, and plan the rest of the deployment accordingly with the other team’s constraints.

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, managing a deployment from TEST to PROD requires careful handling of dependencies, especially when objects from another team’s application are involved. The scenario describes a dependency issue during deployment, signaling a need for collaboration and governance. Let’s evaluate each option:

A. Create your own object with the same code base, replace the dependent object in the application, and deploy to PROD:This approach involves duplicating the object, which introduces redundancy, maintenance risks, and potential version control issues. It violates Appian’s governance principles, as objects should be owned and managed by their respective teams to ensure consistency and avoid conflicts. Appian’s deployment best practices discourage duplicating objects unless absolutely necessary, making this an unsustainable and risky solution.

B. Halt the production deployment and contact the other team for guidance on promoting the object to PROD:This is the correct step. When an object from another application (owned by a separate team) is a dependency, Appian’s deployment process requires coordination to ensure both applications’ objects are deployed in sync. Halting the deployment prevents partial deployments that could break functionality, and contacting the other team aligns with Appian’s collaboration and governance guidelines. The other team can provide the necessary object version, adjust their deployment timeline, or resolve the dependency, ensuring a stable PROD environment.

C. Check the dependencies of the necessary object. Deploy to PROD if there are few dependencies and it is low risk:This approach risks deploying an incomplete or unstable application if the dependency isn’t fully resolved. Even with “few dependencies” and “low risk,” deploying without the other team’s object could lead to runtime errors or broken functionality in PROD. Appian’s documentation emphasizes thorough dependency management during deployment, requiring all objects (including those from other applications) to be promoted together, making this risky and not recommended.

D. Push a functionally viable package to PROD without the dependencies, and plan the rest of the deployment accordingly with the other team’s constraints:Deploying without dependencies creates an incomplete solution, potentially leaving the application non-functional or unstable in PROD. Appian’s deployment process ensures all dependencies are included to maintain application integrity, and partial deployments are discouraged unless explicitly planned (e.g., phased rollouts). This option delays resolution and increases risk, contradicting Appian’s best practices for Production stability.

Conclusion: Halting the production deployment and contacting the other team for guidance (B) is the next step. It ensures proper collaboration, aligns with Appian’s governance model, and prevents deployment errors, providing a safe and effective resolution.

You are developing a case management application to manage support cases for a large set of sites. One of the tabs in this application's site is a record grid of cases, along with information about the site corresponding to that case. Users must be able to filter cases by priority level and status.

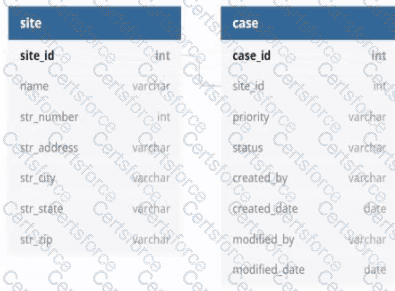

You decide to create a view as the source of your entity-backed record, which joins the separate case/site tables (as depicted in the image above).

Which three columns should be indexed?

site_id

status

name

modified_date

priority

case_id

Indexing columns can improve the performance of queries that use those columns in filters, joins, or order by clauses. In this case, the columns that should be indexed are site_id, status, and priority, because they are used for filtering or joining the tables. Site_id is used to join the case and site tables, so indexing it will speed up the join operation. Status and priority are used to filter the cases by the user’s input, so indexing them will reduce the number of rows that need to be scanned. Name, modified_date, and case_id do not need to be indexed, because they are not used for filtering or joining. Name and modified_date are only used for displaying information in the record grid, and case_id is only used as a unique identifier for each record. Verified References: Appian Records Tutorial, Appian Best Practices

As an Appian Lead Developer, optimizing a database view for an entity-backed record grid requires indexing columns frequently used in queries, particularly for filtering and joining. The scenario involves a record grid displaying cases with site information, filtered by “priority level” and “status,” and joined via the site_id foreign key. The image shows two tables (site and case) with a relationship via site_id. Let’s evaluate each column based on Appian’s performance best practices and query patterns:

A. site_id:This is a primary key in the site table and a foreign key in the case table, used for joining the tables in the view. Indexing site_id in the case table (and ensuring it’s indexed in site as a PK) optimizes JOIN operations, reducing query execution time for the record grid. Appian’s documentation recommends indexing foreign keys in large datasets to improve query performance, especially for entity-backed records. This is critical for the join and must be included.

B. status:Users filter cases by “status” (a varchar column in the case table). Indexing status speeds up filtering queries (e.g., WHERE status = 'Open') in the record grid, particularly with large datasets. Appian emphasizes indexing columns used in WHERE clauses or filters to enhance performance, making this a key column for optimization. Since status is a common filter, it’s essential.

C. name:This is a varchar column in the site table, likely used for display (e.g., site name in the grid). However, the scenario doesn’t mention filtering or sorting by name, and it’s not part of the join or required filters. Indexing name could improve searches if used, but it’s not a priority given the focus on priority and status filters. Appian advises indexing only frequently queried or filtered columns to avoid unnecessary overhead, so this isn’t necessary here.

D. modified_date:This is a date column in the case table, tracking when cases were last updated. While useful for sorting or historical queries, the scenario doesn’t specify filtering or sorting by modified_date in the record grid. Indexing it could help if used, but it’s not critical for the current requirements. Appian’s performance guidelines prioritize indexing columns in active filters, making this lower priority than site_id, status, and priority.

E. priority:Users filter cases by “priority level” (a varchar column in the case table). Indexing priority optimizes filtering queries (e.g., WHERE priority = 'High') in the record grid, similar to status. Appian’s documentation highlights indexing columns used in WHERE clauses for entity-backed records, especially with large datasets. Since priority is a specified filter, it’s essential to include.

F. case_id:This is the primary key in the case table, already indexed by default (as PKs are automatically indexed in most databases). Indexing it again is redundant and unnecessary, as Appian’s Data Store configuration relies on PKs for unique identification but doesn’t require additional indexing for performance in this context. The focus is on join and filter columns, not the PK itself.

Conclusion: The three columns to index are A (site_id), B (status), and E (priority). These optimize the JOIN (site_id) and filter performance (status, priority) for the record grid, aligning with Appian’s recommendations for entity-backed records and large datasets. Indexing these columns ensures efficient querying for user filters, critical for the application’s performance.
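A minimal sketch of the indexed schema behind such a view is shown below. It assumes Python's built-in sqlite3 module as a stand-in database, and the names `support_case`, `case_site_view`, and the `idx_case_*` indexes are hypothetical (the table is not called `case` here because that is a reserved word in SQL). The column names follow the question.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE site (site_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE support_case (
        case_id INTEGER PRIMARY KEY,
        site_id INTEGER REFERENCES site(site_id),
        status TEXT, priority TEXT, modified_date TEXT
    );

    -- Index the join key and the two user-facing filter columns.
    CREATE INDEX idx_case_site_id  ON support_case (site_id);
    CREATE INDEX idx_case_status   ON support_case (status);
    CREATE INDEX idx_case_priority ON support_case (priority);

    -- View used as the record source: cases joined to their site.
    CREATE VIEW case_site_view AS
        SELECT c.case_id, c.status, c.priority, c.modified_date,
               s.site_id, s.name AS site_name
        FROM support_case c
        JOIN site s ON s.site_id = c.site_id;

    INSERT INTO site VALUES (1, 'Berlin'), (2, 'Austin');
    INSERT INTO support_case VALUES
        (10, 1, 'Open',   'High', '2024-01-05'),
        (11, 1, 'Closed', 'High', '2024-01-06'),
        (12, 2, 'Open',   'Low',  '2024-01-07');
""")

# The grid's user filters translate to a WHERE clause on the view.
rows = conn.execute(
    "SELECT case_id, site_name FROM case_site_view "
    "WHERE status = ? AND priority = ? ORDER BY case_id",
    ("Open", "High"),
).fetchall()
print(rows)

# List the explicit indexes that exist on the case table.
index_names = sorted(
    r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'index' AND tbl_name = 'support_case'"
    )
)
print(index_names)
```

Note that the indexes are created on the underlying tables, not on the view itself; the view only benefits from them when the database can push the filters and the join down to those tables.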

You add an index on the searched field of a MySQL table with many rows (>100k). The field would benefit greatly from the index in which three scenarios?

The field contains a textual short business code.

The field contains long unstructured text such as a hash.

The field contains many datetimes, covering a large range.

The field contains big integers, above and below 0.

The field contains a structured JSON.

Comprehensive and Detailed In-Depth Explanation:

Adding an index to a searched field in a MySQL table with over 100,000 rows improves query performance by reducing the number of rows scanned during searches, joins, or filters. The benefit of an index depends on the field’s data type, cardinality (uniqueness), and query patterns. MySQL indexing best practices, as aligned with Appian’s Database Optimization Guidelines, highlight scenarios where indices are most effective.

Option A (The field contains a textual short business code):This benefits greatly from an index. A short business code (e.g., a 5-10 character identifier like "CUST123") typically has high cardinality (many unique values) and is often used in WHERE clauses or joins. An index on this field speeds up exact-match queries (e.g., WHERE business_code = 'CUST123'), which are common in Appian applications for lookups or filtering.

Option C (The field contains many datetimes, covering a large range):This is highly beneficial. Datetime fields with a wide range (e.g., transaction timestamps over years) are frequently queried with range conditions (e.g., WHERE datetime BETWEEN '2024-01-01' AND '2025-01-01') or sorting (e.g., ORDER BY datetime). An index on this field optimizes these operations, especially in large tables, aligning with Appian’s recommendation to index time-based fields for performance.

Option D (The field contains big integers, above and below 0):This benefits significantly. Big integers (e.g., IDs or quantities) with a broad range and high cardinality are ideal for indexing. Queries like WHERE id > 1000 or WHERE quantity < 0 leverage the index for efficient range scans or equality checks, a common pattern in Appian data store queries.

Option B (The field contains long unstructured text such as a hash):This benefits less. Long unstructured text (e.g., a 128-character SHA hash) has high cardinality but is less efficient for indexing due to its size. MySQL indices on large text fields can slow down writes and consume significant storage, and full-text searches are better handled with specialized indices (e.g., FULLTEXT), not standard B-tree indices. Appian advises caution with indexing large text fields unless necessary.

Option E (The field contains a structured JSON): This benefits the least from a plain index. MySQL supports JSON fields, but a JSON column cannot be indexed directly. Targeted queries (e.g., WHERE JSON_EXTRACT(json_col, '$.key') = 'value') need an index on a generated column that extracts the value, which requires additional setup beyond a simple index and reduces its immediate benefit.

For a table with over 100,000 rows, indices are most effective on fields with high selectivity and frequent query usage (e.g., short codes, datetimes, integers), making A, C, and D the optimal scenarios.
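Selectivity can be estimated as the number of distinct values divided by the number of rows. The sketch below is a rough Python illustration with synthetic data; the column names are hypothetical, and the boolean `is_active` column is added here purely as a low-cardinality contrast that does not appear in the question.

```python
from datetime import datetime, timedelta


def selectivity(values):
    """Distinct values divided by total rows (1.0 means every row is unique)."""
    values = list(values)
    return len(set(values)) / len(values) if values else 0.0


n = 1000
start = datetime(2024, 1, 1)

# Synthetic columns mirroring the scenarios in the question.
columns = {
    "business_code": [f"CUST{i:04d}" for i in range(n)],           # short text code
    "created_at": [start + timedelta(hours=i) for i in range(n)],  # wide datetime range
    "balance": [i - n // 2 for i in range(n)],                     # integers around 0
    "is_active": [i % 2 == 0 for i in range(n)],                   # contrast: 2 values
}

report = {name: selectivity(vals) for name, vals in columns.items()}
for name, score in sorted(report.items(), key=lambda kv: -kv[1]):
    print(f"{name:15s} {score:.3f}")
```

A column whose score is close to 1.0 lets an index narrow a lookup to very few rows, while a column with only a handful of distinct values leaves the database reading a large share of the table even with an index.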

As part of your implementation workflow, users need to retrieve data stored in a third-party Oracle database on an interface. You need to design a way to query this information.

How should you set up this connection and query the data?

Configure a Query Database node within the process model. Then, type in the connection information, as well as a SQL query to execute and return the data in process variables.

Configure a timed utility process that queries data from the third-party database daily, and stores it in the Appian business database. Then use a!queryEntity using the Appian data source to retrieve the data.

Configure an expression-backed record type, calling an API to retrieve the data from the third-party database. Then, use a!queryRecordType to retrieve the data.

In the Administration Console, configure the third-party database as a “New Data Source.” Then, use a!queryEntity to retrieve the data.

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, designing a solution to query data from a third-party Oracle database for display on an interface requires secure, efficient, and maintainable integration. The scenario focuses on real-time retrieval for users, so the design must leverage Appian’s data connectivity features. Let’s evaluate each option:

A. Configure a Query Database node within the process model. Then, type in the connection information, as well as a SQL query to execute and return the data in process variables:The Query Database node (part of the Smart Services) allows direct SQL execution against a database, but it requires manual connection details (e.g., JDBC URL, credentials), which isn’t scalable or secure for Production. Appian’s documentation discourages using Query Database for ongoing integrations due to maintenance overhead, security risks (e.g., hardcoding credentials), and lack of governance. This is better for one-off tasks, not real-time interface queries, making it unsuitable.

B. Configure a timed utility process that queries data from the third-party database daily, and stores it in the Appian business database. Then use a!queryEntity using the Appian data source to retrieve the data:This approach syncs data daily into Appian’s business database (e.g., via a timer event and Query Database node), then queries it with a!queryEntity. While it works for stale data, it introduces latency (up to 24 hours) for users, which doesn’t meet real-time needs on an interface. Appian’s best practices recommend direct data source connections for up-to-date data, not periodic caching, unless latency is acceptable—making this inefficient here.

C. Configure an expression-backed record type, calling an API to retrieve the data from the third-party database. Then, use a!queryRecordType to retrieve the data: Expression-backed record types use an expression (typically a rule that calls an integration object) to fetch data, but they’re designed for external APIs, not direct database queries. The scenario specifies an Oracle database, not an API, so this requires building a custom REST service on the Oracle side, adding complexity and latency. Appian’s documentation favors Data Sources for database queries over API calls when direct access is available, making this less optimal and over-engineered.

D. In the Administration Console, configure the third-party database as a “New Data Source.” Then, use a!queryEntity to retrieve the data: This is the best choice. In the Appian Administration Console, you can configure a JDBC Data Source for the Oracle database, providing connection details (e.g., URL, driver, credentials). This creates a secure, managed connection for querying via a!queryEntity, which is Appian’s standard function for Data Store Entities. Users can then retrieve data on interfaces using entity-backed records or queries, ensuring real-time access with minimal latency. Appian’s documentation recommends Data Sources for database integrations, offering scalability, security, and governance, which fits this requirement.

Conclusion: Configuring the third-party database as a New Data Source and using a!queryEntity (D) is the recommended approach. It provides direct, real-time access to Oracle data for interface display, leveraging Appian’s native data connectivity features and aligning with Lead Developer best practices for third-party database integration.

You are just starting with a new team that has been working together on an application for months. They ask you to review some of their views that have been degrading in performance. The views are highly complex with hundreds of lines of SQL. What is the first step in troubleshooting the degradation?

Go through the entire database structure to obtain an overview, ensure you understand the business needs, and then normalize the tables to optimize performance.

Run an explain statement on the views, identify critical areas of improvement that can be remediated without business knowledge.

Go through all of the tables one by one to identify which of the grouped by, ordered by, or joined keys are currently indexed.

Browse through the tables, note any tables that contain a large volume of null values, and work with your team to plan for table restructure.

Comprehensive and Detailed In-Depth Explanation:

Troubleshooting performance degradation in complex SQL views within an Appian application requires a systematic approach. The views, described as having hundreds of lines of SQL, suggest potential issues with query execution, indexing, or join efficiency. As a new team member, the first step should focus on quickly identifying the root cause without overhauling the system prematurely. Appian’s Performance Troubleshooting Guide and database optimization best practices provide the framework for this process.

Option B (Run an explain statement on the views, identify critical areas of improvement that can be remediated without business knowledge):This is the recommended first step. Running an EXPLAIN statement (or equivalent, such as EXPLAIN PLAN in some databases) analyzes the query execution plan, revealing details like full table scans, missing indices, or inefficient joins. This technical analysis can identify immediate optimization opportunities (e.g., adding indices or rewriting subqueries) without requiring business input, allowing you to address low-hanging fruit quickly. Appian encourages using database tools to diagnose performance issues before involving stakeholders, making this a practical starting point as you familiarize yourself with the application.

Option A (Go through the entire database structure to obtain an overview, ensure you understand the business needs, and then normalize the tables to optimize performance):This is too broad and time-consuming as a first step. Understanding business needs and normalizing tables are valuable but require collaboration with the team and stakeholders, delaying action. It’s better suited for a later phase after initial technical analysis.

Option C (Go through all of the tables one by one to identify which of the grouped by, ordered by, or joined keys are currently indexed):Manually checking indices is useful but inefficient without first knowing which queries are problematic. The EXPLAIN statement provides targeted insights into index usage, making it a more direct initial step than a manual table-by-table review.

Option D (Browse through the tables, note any tables that contain a large volume of null values, and work with your team to plan for table restructure): Identifying null values and planning restructures is a long-term optimization strategy, not a first step. It requires team input and may not address the immediate performance degradation, which is better tackled with query-level diagnostics.

Starting with an EXPLAIN statement allows you to gather data-driven insights, align with Appian’s performance troubleshooting methodology, and proceed with informed optimizations.
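As a minimal sketch of what an execution plan reveals, the following uses SQLite's `EXPLAIN QUERY PLAN` through Python's standard `sqlite3` module. The table, view, and index names are hypothetical and SQLite only stands in for whatever database backs the Appian application; the exact EXPLAIN syntax and output differ by vendor.

```python
import sqlite3

# In-memory database with a hypothetical table and a simple view over it.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER,
        status TEXT,
        total REAL
    );
    CREATE VIEW open_orders AS
        SELECT id, customer_id, total FROM orders WHERE status = 'OPEN';
""")

def plan(sql):
    """Return the 'detail' column of each EXPLAIN QUERY PLAN row."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

query = "SELECT * FROM open_orders WHERE customer_id = 42"

# Without an index, the plan reports a scan of the underlying table.
plan_before = plan(query)

# Add an index on the filtered column and inspect the plan again.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan(query)

print("before:", plan_before)
print("after: ", plan_after)
```

Comparing the two plans shows the scan being replaced by an index search, which is the kind of finding that can be acted on without business input.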

An existing integration is implemented in Appian. Its role is to send data for the main case and its related objects in a complex JSON to a REST API, to insert new information into an existing application. This integration was working well for a while. However, the customer highlighted one specific scenario where the integration failed in Production, and the API responded with a 500 Internal Error code. The project is in Post-Production Maintenance, and the customer needs your assistance. Which three steps should you take to troubleshoot the issue?

Send the same payload to the test API to ensure the issue is not related to the API environment.

Send a test case to the Production API to ensure the service is still up and running.

Analyze the behavior of subsequent calls to the Production API to ensure there is no global issue, and ask the customer to analyze the API logs to understand the nature of the issue.

Obtain the JSON sent to the API and validate that there is no difference between the expected JSON format and the sent one.

Ensure there were no network issues when the integration was sent.

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer in a Post-Production Maintenance phase, troubleshooting a failed integration (HTTP 500 Internal Server Error) requires a systematic approach to isolate the root cause—whether it’s Appian-side, API-side, or environmental. A 500 error typically indicates an issue on the server (API) side, but the developer must confirm Appian’s contribution and collaborate with the customer. The goal is to select three steps that efficiently diagnose the specific scenario while adhering to Appian’s best practices. Let’s evaluate each option:

A. Send the same payload to the test API to ensure the issue is not related to the API environment: This is a critical step. Replicating the failure by sending the exact payload (from the failed Production call) to a test API environment helps determine if the issue is environment-specific (e.g., Production-only configuration) or inherent to the payload/API logic. Appian’s Integration troubleshooting guidelines recommend testing in a non-Production environment first to isolate variables. If the test API succeeds, the Production environment or API state is implicated; if it fails, the payload or API logic is suspect. This step leverages Appian’s Integration object logging (e.g., request/response capture) and is a standard diagnostic practice.

B. Send a test case to the Production API to ensure the service is still up and running: While verifying Production API availability is useful, sending an arbitrary test case risks further Production disruption during maintenance and may not replicate the specific scenario. A generic test might succeed (e.g., with simpler data), masking the issue tied to the complex JSON. Appian’s Post-Production guidelines discourage unnecessary Production interactions unless replicating the exact failure is controlled and justified. This step is less precise than analyzing existing behavior (C) and is not among the top three priorities.

C. Analyze the behavior of subsequent calls to the Production API to ensure there is no global issue, and ask the customer to analyze the API logs to understand the nature of the issue: This is essential. Reviewing subsequent Production calls (via Appian’s Integration logs or monitoring tools) checks if the 500 error is isolated or systemic (e.g., API outage). Since Appian can’t access API server logs, collaborating with the customer to review their logs is critical for a 500 error, which often stems from server-side exceptions (e.g., unhandled data). Appian Lead Developer training emphasizes partnership with API owners and using Appian’s Process History or Application Monitoring to correlate failures, which makes this a key troubleshooting step.

D. Obtain the JSON sent to the API and validate that there is no difference between the expected JSON format and the sent one: This is a foundational step. The complex JSON payload is central to the integration, and a 500 error could result from malformed data (e.g., missing fields, invalid types) that the API can’t process. In Appian, you can retrieve the sent JSON from the Integration object’s execution logs (if enabled) or Process Instance details. Comparing it against the API’s documented schema (e.g., via Postman or API specs) ensures Appian’s output aligns with expectations. Appian’s documentation stresses validating payloads as a first-line check for integration failures, especially in specific scenarios.

E. Ensure there were no network issues when the integration was sent: While network issues (e.g., timeouts, DNS failures) can cause integration errors, a 500 Internal Server Error indicates that the request reached the API and triggered a server-side failure. A genuine network problem typically surfaces as a timeout or connection error with no HTTP response at all, or as a gateway error such as 502 or 504 from an intermediary. Appian’s Connected System logs can confirm HTTP status codes, and network checks (e.g., via IT teams) are secondary unless connectivity is suspected. This step is less relevant to the 500 error and lower priority than A, C, and D.

Conclusion: The three best steps are A (test API with same payload), C (analyze subsequent calls and customer logs), and D (validate JSON payload). These steps systematically isolate the issue—testing Appian’s output (D), ruling out environment-specific problems (A), and leveraging customer insights into the API failure (C). This aligns with Appian’s Post-Production Maintenance strategies: replicate safely, analyze logs, and validate data.
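The payload comparison in step D can be sketched outside Appian as a small script. This is an illustrative sketch only: the schema, field names, and sample payload below are hypothetical, and a real check would be driven by the API’s documented contract.

```python
import json

# Hypothetical expected schema: field name -> Python type after JSON parsing.
EXPECTED_SCHEMA = {
    "caseId": int,
    "title": str,
    "relatedObjects": list,
}

def diff_payload(raw_json, schema):
    """Return readable differences between a sent payload and the expected schema."""
    payload = json.loads(raw_json)
    problems = []
    # Check every expected field for presence and type.
    for field, expected_type in schema.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, got {actual}"
            )
    # Flag anything the API contract does not mention.
    for field in payload:
        if field not in schema:
            problems.append(f"unexpected field: {field}")
    return problems

# Hypothetical payload captured from the failed call.
failed_payload = '{"caseId": "1024", "title": "Renewal", "notes": null}'
problems = diff_payload(failed_payload, EXPECTED_SCHEMA)
for line in problems:
    print(line)
```

Running the same check against a payload from a successful call gives a baseline, so any difference listed for the failed case points at the scenario-specific data.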

You need to design a complex Appian integration to call a RESTful API. The RESTful API will be used to update a case in a customer’s legacy system.

What are three prerequisites for designing the integration?

Define the HTTP method that the integration will use.

Understand the content of the expected body, including each field type and their limits.

Understand whether this integration will be used in an interface or in a process model.

Understand the different error codes managed by the API and the process of error handling in Appian.

Understand the business rules to be applied to ensure the business logic of the data.

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, designing a complex integration to a RESTful API for updating a case in a legacy system requires a structured approach to ensure reliability, performance, and alignment with business needs. The integration involves sending a JSON payload (implied by the context) and handling responses, so the focus is on technical and functional prerequisites. Let’s evaluate each option:

A. Define the HTTP method that the integration will use: This is a primary prerequisite. RESTful APIs use HTTP methods (e.g., GET, POST, PUT, PATCH) to define the operation. Here, updating a case likely requires PUT or PATCH, or POST if the API is designed that way. Appian’s Connected System and Integration objects require specifying the method to configure the HTTP request correctly. Understanding the API’s method ensures the integration aligns with its design, making this essential for design. Appian’s documentation emphasizes choosing the correct HTTP method as a foundational step.

B. Understand the content of the expected body, including each field type and their limits: This is also critical. The JSON payload for updating a case includes fields (e.g., text, dates, numbers), and the API expects a specific structure with field types (e.g., string, integer) and limits (e.g., max length, size constraints). In Appian, the Integration object requires a dictionary or CDT to construct the body, and mismatches (e.g., wrong types, exceeding limits) cause errors (e.g., 400 Bad Request). Appian’s best practices mandate understanding the API schema to ensure data compatibility, making this a key prerequisite.

C. Understand whether this integration will be used in an interface or in a process model: While knowing the context (interface vs. process model) is useful for design (e.g., synchronous vs. asynchronous calls), it’s a usage consideration rather than a prerequisite for the integration itself. Appian supports integrations in both contexts, and the integration’s design (e.g., HTTP method, body) remains the same. This is secondary to technical API details, so it’s not among the top three prerequisites.

D. Understand the different error codes managed by the API and the process of error handling in Appian: This is essential. RESTful APIs return HTTP status codes (e.g., 200 OK, 400 Bad Request, 500 Internal Server Error), and the customer’s API likely documents these for failure scenarios (e.g., invalid data, server issues). Appian’s Integration objects can handle errors via error mappings or process models, and understanding these codes ensures robust error handling (e.g., retry logic, user notifications). Appian’s documentation stresses error handling as a core design element for reliable integrations, making this a primary prerequisite.

E. Understand the business rules to be applied to ensure the business logic of the data: While business rules (e.g., validating case data before sending) are important for the overall application, they aren’t a prerequisite for designing the integration itself; they belong to the application logic (e.g., process model or interface). The integration focuses on technical interaction with the API, not business validation, which can be handled separately in Appian. This is a secondary concern, not a core design requirement for the integration.

Conclusion: The three prerequisites are A (define the HTTP method), B (understand the body content and limits), and D (understand error codes and handling). These ensure the integration is technically sound, compatible with the API, and resilient to errors—critical for a complex RESTful API integration in Appian.
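The three prerequisites can be captured as a small, explicit contract before any Appian object is built. The sketch below is illustrative only: the method, field names, limits, and the retry policy are assumptions, not details of the customer’s API or of Appian’s Integration object.

```python
from dataclasses import dataclass
from typing import Optional

# Prerequisite A: the HTTP method (assumed here; the API documentation decides).
HTTP_METHOD = "PUT"

# Prerequisite B: each body field with its type and limit (hypothetical fields).
@dataclass(frozen=True)
class FieldSpec:
    expected_type: type
    max_length: Optional[int] = None

BODY_SPEC = {
    "caseId": FieldSpec(int),
    "status": FieldSpec(str, max_length=20),
    "summary": FieldSpec(str, max_length=255),
}

def validate_body(body):
    """Return a list of violations of BODY_SPEC; an empty list means the body conforms."""
    errors = []
    for name, spec in BODY_SPEC.items():
        value = body.get(name)
        if not isinstance(value, spec.expected_type):
            errors.append(f"{name}: expected {spec.expected_type.__name__}")
        elif spec.max_length is not None and len(value) > spec.max_length:
            errors.append(f"{name}: longer than {spec.max_length} characters")
    return errors

# Prerequisite D: how each class of status code is handled (assumed policy).
def handling_for(status_code):
    if 200 <= status_code < 300:
        return "success"
    if status_code in (408, 429) or status_code >= 500:
        return "retry"  # transient or server-side: retry, then escalate
    return "fail"       # other 4xx: the request itself must be corrected

print(HTTP_METHOD, validate_body({"caseId": 7, "status": "OPEN", "summary": "ok"}))
print(handling_for(500), handling_for(400))
```

Writing the contract down this way makes gaps visible early: a field with no documented limit, or a status code with no agreed handling, is a question for the API owner before design continues.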