Which two statements are correct about transactions in Kafka? (Select two.)

Which two producer exceptions are examples of the class RetriableException? (Select two.)

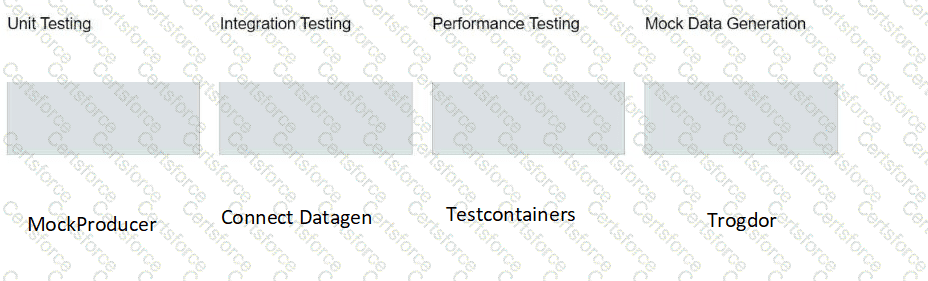

Match the testing tool with the type of test it is typically used to perform.

You are developing a Java application using a Kafka consumer.

You need to integrate Kafka’s client logs with your own application’s logs using log4j2.

Which Java library dependency must you include in your project?

Which statement describes the storage location for a sink connector’s offsets?

You need to explain the best reason to implement the ConsumerRebalanceListener callback interface before a consumer group rebalance occurs.

Which statement is correct?

You have a consumer group with default configuration settings reading messages from your Kafka cluster.

You need to optimize throughput so the consumer group processes more messages in the same amount of time.

Which change should you make?

Your application is consuming from a topic with one consumer group.

The number of running consumers is equal to the number of partitions.

Application logs show that some consumers are leaving the consumer group during peak time, triggering a rebalance. You also notice that your application is processing many duplicates.

You need to stop consumers from leaving the consumer group.

What should you do?