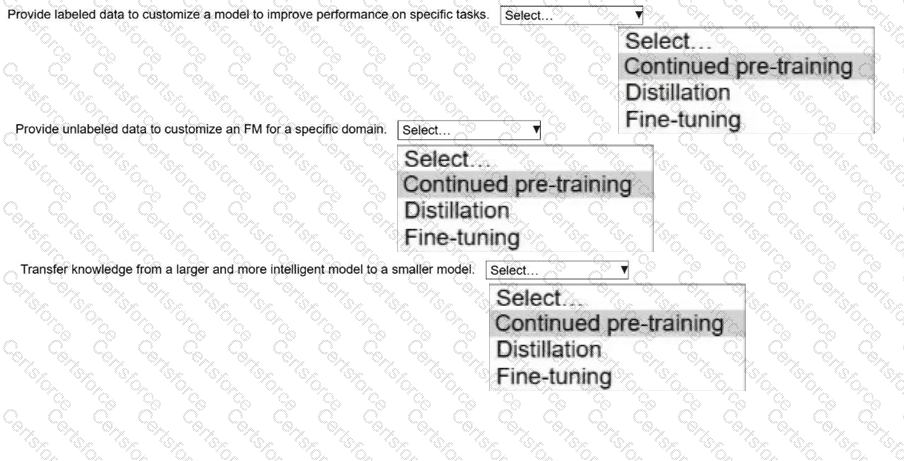

Provide labeled data to customize a model to improve performance on specific tasks.

Correct Answer: Fine-tuning

Comprehensive and Detailed Explanation (AWS AI documents):

AWS generative AI guidance defines fine-tuning as the process of adapting a pre-trained foundation model using labeled, task-specific data. Fine-tuning adjusts the model’s parameters so it performs better on a particular task, such as classification, summarization, or domain-specific reasoning.

Fine-tuning is commonly used when:

High-quality labeled data is available

The goal is to improve accuracy on a specific task

The base foundation model (FM) already has strong general capabilities
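Here is a minimal sketch of what labeled, task-specific data looks like in practice. The Python below pairs each input with its desired output and writes the pairs as JSON Lines. The `prompt`/`completion` field names are an assumption based on the layout Amazon Bedrock has documented for fine-tuning text models. The required schema varies by model, so confirm it in the current documentation. The examples and the helper `to_finetuning_jsonl` are hypothetical.

```python
import json

# Hypothetical labeled examples: each input is paired with its desired output (the label).
labeled_examples = [
    ("Classify the sentiment: 'The delivery was fast and the product works.'", "positive"),
    ("Classify the sentiment: 'The package arrived damaged.'", "negative"),
]

def to_finetuning_jsonl(pairs):
    """Serialize (prompt, completion) pairs as JSON Lines, one record per line.

    Assumes a prompt/completion schema for text-model fine-tuning;
    other models may require a different layout.
    """
    return "\n".join(
        json.dumps({"prompt": prompt, "completion": completion})
        for prompt, completion in pairs
    )

print(to_finetuning_jsonl(labeled_examples))
```

The completion field is the label. It tells the model exactly which output is expected for each prompt, and that is what distinguishes fine-tuning data from the unlabeled text used in continued pre-training.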

AWS AI Study Guide References:

AWS foundation model customization methods

AWS fine-tuning concepts for generative AI

Provide unlabeled data to customize a foundation model for a specific domain.

Correct Answer: Continued pre-training

Comprehensive and Detailed Explanation (AWS AI documents):

AWS documentation describes continued pre-training as extending the training of a foundation model using large volumes of unlabeled, domain-specific data. This method helps the model better understand domain vocabulary, structure, and context without requiring labeled datasets.

Continued pre-training is useful when:

Large amounts of unlabeled domain data are available

The goal is to improve domain understanding rather than a single task

Labeling data would be expensive or impractical
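For contrast, unlabeled data for continued pre-training is just raw domain text. The sketch below splits hypothetical domain documents into word-limited chunks and writes each chunk as a single-field record. The `input` field name mirrors the layout Amazon Bedrock has documented for continued pre-training, but treat it as an assumption. The helper `to_pretraining_jsonl` and the `max_words` chunk size are illustrative only.

```python
import json

# Hypothetical unlabeled domain text: no prompts and no answers, only raw documents.
domain_documents = [
    "Hypothetical clinical note: the patient presented with dyspnea on exertion.",
    "Hypothetical formulary entry: dosage adjustments apply for renal impairment.",
]

def to_pretraining_jsonl(documents, max_words=200):
    """Split each document into chunks of at most max_words words and
    emit one {"input": chunk} record per chunk as JSON Lines.

    The "input" field name and the default chunk size are assumptions.
    """
    records = []
    for doc in documents:
        words = doc.split()
        for start in range(0, len(words), max_words):
            chunk = " ".join(words[start:start + max_words])
            records.append(json.dumps({"input": chunk}))
    return "\n".join(records)

print(to_pretraining_jsonl(domain_documents, max_words=6))
```

No label field is present. The model picks up domain vocabulary and structure by learning to predict the text itself.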

AWS AI Study Guide References:

AWS generative AI training lifecycle

AWS guidance on domain adaptation using unlabeled data

Transfer knowledge from a larger and more intelligent model to a smaller model.

Correct Answer: Distillation

Comprehensive and Detailed Explanation (AWS AI documents):

AWS generative AI materials define distillation as a technique where a smaller model (student) learns to replicate the behavior of a larger, more capable model (teacher). The goal is to retain most of the performance while reducing model size, cost, and inference latency.
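The teacher/student idea can be sketched with the classic soft-target formulation of knowledge distillation. In this formulation, the student is trained to match the teacher's temperature-softened output probabilities, with the gap measured as KL divergence. This is a generic illustration in plain Python with hypothetical logits. It is not how any particular AWS service implements distillation. Managed services may instead, for example, train the student on responses generated by the teacher.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions,
    scaled by temperature**2 as in the classic soft-target formulation."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2

# Hypothetical logits for a 3-class task.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # ranks the classes much like the teacher
far_student = [0.5, 3.0, 1.0]    # prefers a different class
print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A higher temperature spreads probability mass across the non-top classes. This lets the student also learn how the teacher ranks the wrong answers, which is information a plain hard label would discard.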

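Distillation is commonly used to:

Reduce model size while retaining most of the larger model's performance

Lower inference cost

Reduce inference latency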

AWS AI Study Guide References:

AWS model optimization techniques

AWS knowledge distillation concepts
